This site accompanies the latter half of ART.T458: Advanced Machine Learning at Tokyo Institute of Technology; this half of the course focuses on deep learning for natural language processing (NLP).

Lecture #1: Feedforward Neural Network (I)

Keywords: binary classification, Threshold Logic Units (TLUs), single-layer neural network, Perceptron algorithm, sigmoid function, stochastic gradient descent (SGD), multi-layer neural network, backpropagation, computation graph, automatic differentiation, universal approximation theorem.
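The keywords above include computation graphs, backpropagation, and automatic differentiation. As a rough illustration, here is a minimal reverse-mode automatic differentiation sketch in pure Python. It assumes scalar values only, and the `Var` class is a hypothetical name for illustration, not the API of autograd, PyTorch, TensorFlow, or JAX. Each operation records its inputs together with the local partial derivatives. `backward()` then visits the graph in reverse topological order and accumulates gradients by the chain rule.

```python
import math

class Var:
    """A scalar node in a computation graph (hypothetical minimal reverse-mode autodiff)."""

    def __init__(self, value, parents=()):
        self.value = value
        self.grad = 0.0
        # Each parent is a pair (node, local partial derivative of this node w.r.t. that parent)
        self.parents = parents

    def __add__(self, other):
        other = other if isinstance(other, Var) else Var(other)
        return Var(self.value + other.value, ((self, 1.0), (other, 1.0)))

    def __mul__(self, other):
        other = other if isinstance(other, Var) else Var(other)
        return Var(self.value * other.value, ((self, other.value), (other, self.value)))

    def sigmoid(self):
        s = 1.0 / (1.0 + math.exp(-self.value))
        return Var(s, ((self, s * (1.0 - s)),))

    def backward(self):
        # Order nodes so every node comes after its inputs (depth-first post-order)
        order, visited = [], set()

        def visit(node):
            if id(node) not in visited:
                visited.add(id(node))
                for parent, _ in node.parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        # Walk from the output back to the inputs, applying the chain rule
        for node in reversed(order):
            for parent, local in node.parents:
                parent.grad += local * node.grad

# A single sigmoid unit: y = sigmoid(w1 * x1 + w2 * x2 + b)
x1, x2 = Var(1.0), Var(2.0)
w1, w2, b = Var(0.5), Var(-0.25), Var(0.0)
y = (w1 * x1 + w2 * x2 + b).sigmoid()
y.backward()
print(y.value, w1.grad, w2.grad, b.grad)
```

Here the pre-activation is 0, so y = 0.5 and dy/dz = 0.25. Each weight's gradient is therefore 0.25 times its input, which matches what backpropagation computes by hand.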

Slides

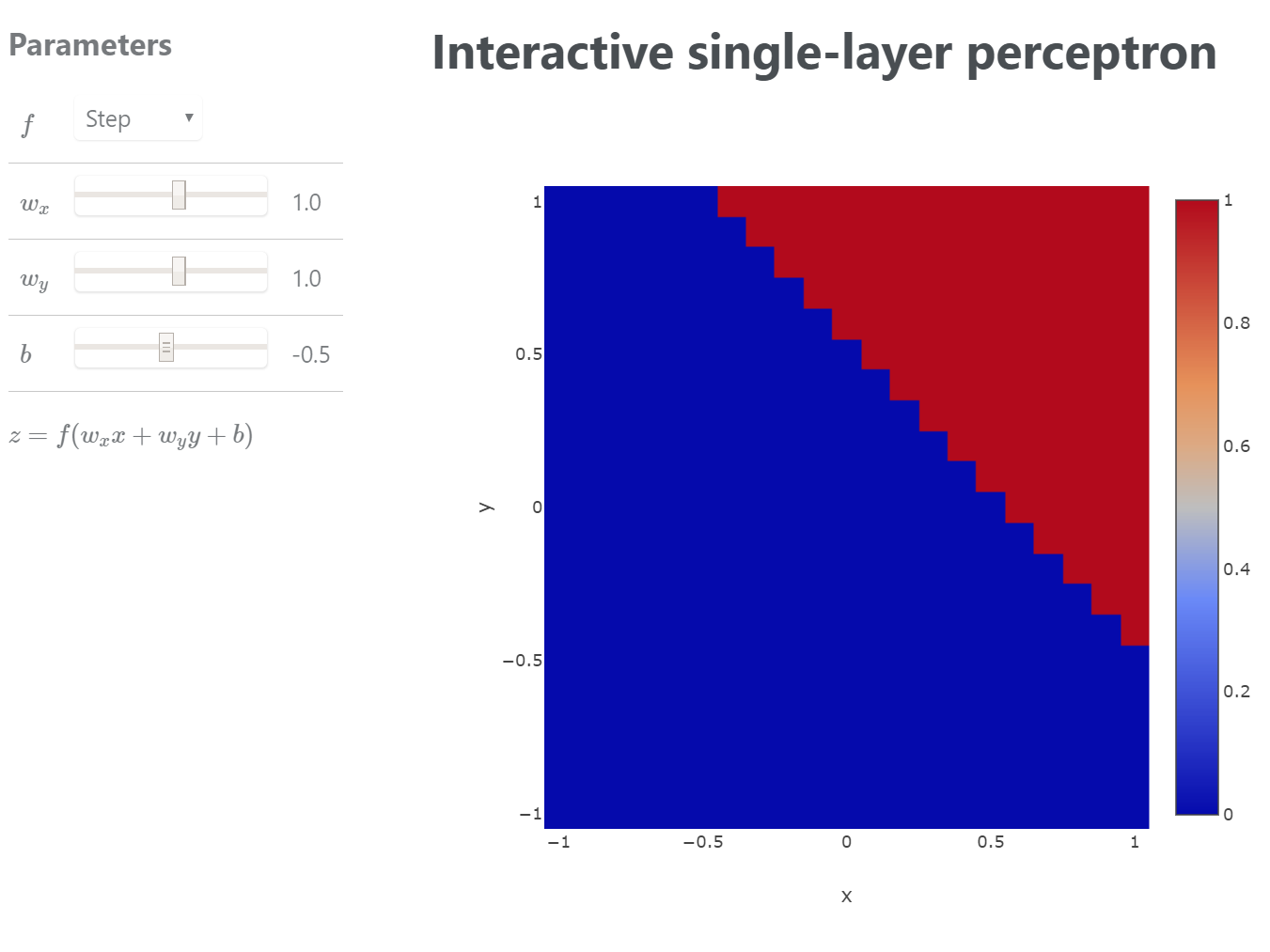

Interactive SLP model

Interactive MLP model

Implementations

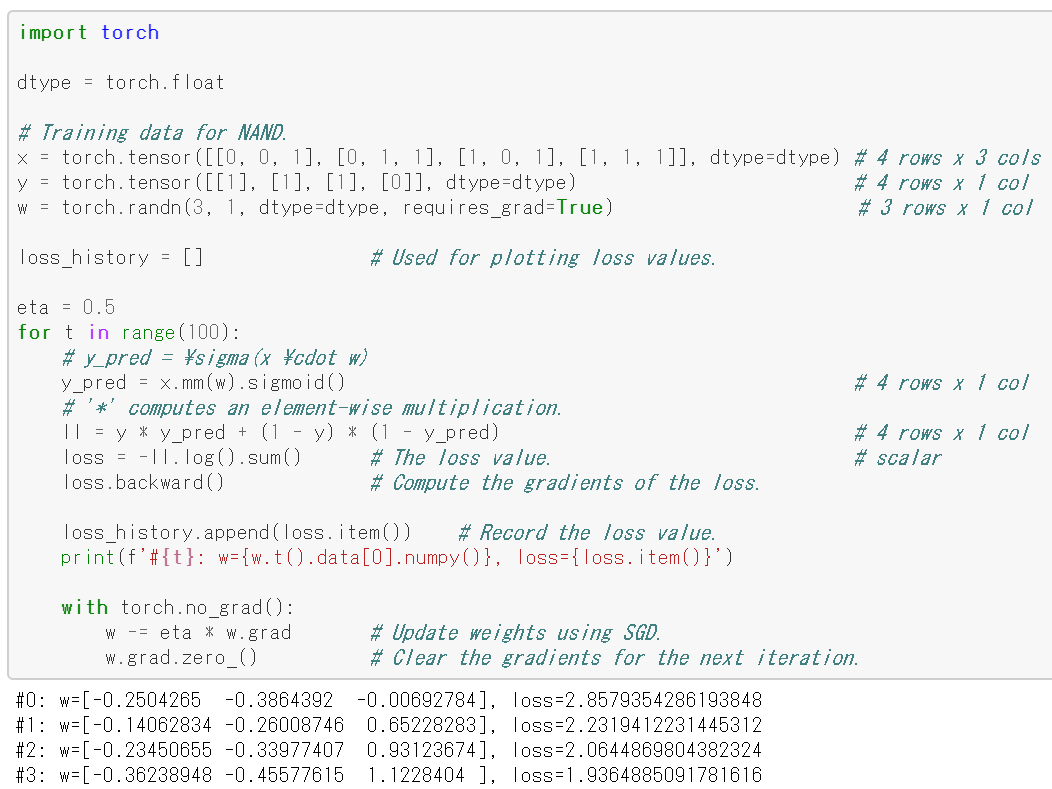

Perceptron algorithm in NumPy; automatic differentiation in autograd, PyTorch, TensorFlow, and JAX; single- and multi-layer neural networks in PyTorch.
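A minimal sketch of the perceptron algorithm in NumPy follows. It assumes labels in {-1, +1} and learns the bias as an extra weight on a constant input of 1. The function names are hypothetical and not taken from the course notebook. The weights are updated only when an example is misclassified, and training stops after an epoch with no mistakes.

```python
import numpy as np

def perceptron_train(X, y, max_epochs=100):
    """Mistake-driven perceptron training.

    X: (n_samples, n_features); y: labels in {-1, +1}.
    A constant 1 is appended to each input so the bias is learned as a weight.
    """
    X = np.hstack([X, np.ones((X.shape[0], 1))])
    w = np.zeros(X.shape[1])
    for _ in range(max_epochs):
        mistakes = 0
        for x_i, y_i in zip(X, y):
            # Update only if x_i is misclassified or lies exactly on the decision boundary
            if y_i * np.dot(w, x_i) <= 0:
                w += y_i * x_i
                mistakes += 1
        if mistakes == 0:  # every training example is classified correctly
            break
    return w

def perceptron_predict(w, X):
    X = np.hstack([X, np.ones((X.shape[0], 1))])
    return np.where(X @ w > 0, 1, -1)

# Logical AND with labels in {-1, +1}: linearly separable, so training converges
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([-1, -1, -1, 1])
w = perceptron_train(X, y)
print(w, perceptron_predict(w, X))
```

The perceptron convergence theorem guarantees that this loop terminates only for linearly separable data. For XOR, it would run until `max_epochs`.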

Lecture #2: Feedforward Neural Network (II)

Keywords: multi-class classification, linear multi-class classifier, softmax function, stochastic gradient descent (SGD), mini-batch training, loss functions, activation functions, ReLU, dropout.
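Several of the functions listed above fit in a few lines of NumPy. The sketch below covers a numerically stable softmax, the cross-entropy loss, ReLU, and dropout. It assumes the "inverted" dropout variant, which rescales at training time. That is a common convention, but not necessarily the one used in the slides. All function names are illustrative.

```python
import numpy as np

def softmax(z):
    """Row-wise softmax; subtracting the row max prevents overflow without changing the result."""
    z = z - z.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def cross_entropy(p, labels):
    """Mean negative log-probability of the gold labels."""
    return -np.mean(np.log(p[np.arange(len(labels)), labels]))

def relu(x):
    return np.maximum(0.0, x)

def dropout(x, p_drop, rng, train=True):
    """Inverted dropout: zero each unit with probability p_drop and scale survivors by
    1 / (1 - p_drop), so the layer is the identity at test time."""
    if not train or p_drop == 0.0:
        return x
    mask = rng.random(x.shape) >= p_drop
    return x * mask / (1.0 - p_drop)

rng = np.random.default_rng(0)
logits = np.array([[2.0, 1.0, 0.1], [1000.0, 1000.0, 1000.0]])
probs = softmax(logits)  # the second row would overflow exp() without the max shift
loss = cross_entropy(probs, np.array([0, 2]))
hidden = dropout(relu(np.array([-1.0, 0.5, 2.0])), p_drop=0.5, rng=rng)
print(probs, loss, hidden)
```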

Slides

Implementations

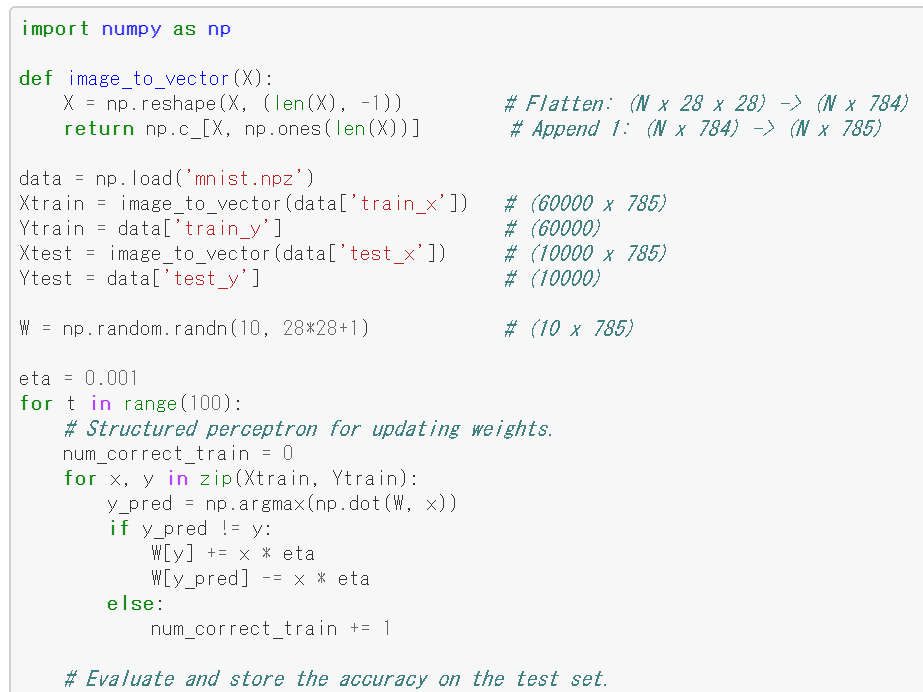

Preparing the MNIST dataset; perceptron algorithm in NumPy; stochastic gradient descent in NumPy; single- and multi-layer neural networks in PyTorch.
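Mini-batch stochastic gradient descent for a linear multi-class (softmax) classifier can be sketched in NumPy as follows. To keep the example self-contained, it uses synthetic 2D Gaussian blobs in place of MNIST. The learning rate, batch size, and epoch count are arbitrary choices, not the course's settings. The key step uses the fact that the gradient of the mean cross-entropy with respect to the logits is (P - Y) / batch_size.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def train_softmax_regression(X, y, n_classes, lr=0.1, batch_size=16, epochs=50, seed=0):
    """Mini-batch SGD on the mean cross-entropy of the linear model z = X W + b."""
    rng = np.random.default_rng(seed)
    n, d = X.shape
    W = np.zeros((d, n_classes))
    b = np.zeros(n_classes)
    Y = np.eye(n_classes)[y]  # one-hot targets
    for _ in range(epochs):
        perm = rng.permutation(n)  # reshuffle the training data every epoch
        for start in range(0, n, batch_size):
            idx = perm[start:start + batch_size]
            P = softmax(X[idx] @ W + b)
            G = (P - Y[idx]) / len(idx)  # d(loss)/d(logits) for this mini-batch
            W -= lr * X[idx].T @ G
            b -= lr * G.sum(axis=0)
    return W, b

# Synthetic stand-in for MNIST: three well-separated Gaussian blobs in 2D
rng = np.random.default_rng(42)
centers = np.array([[0.0, 4.0], [4.0, -2.0], [-4.0, -2.0]])
y = np.repeat(np.arange(3), 50)
X = centers[y] + rng.normal(scale=0.5, size=(150, 2))
W, b = train_softmax_regression(X, y, n_classes=3)
accuracy = np.mean(np.argmax(X @ W + b, axis=1) == y)
print(accuracy)
```

For MNIST, the same loop would apply with X as the flattened 784-pixel images and n_classes=10.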

Lecture #3: Convolutional Neural Network

Keywords: Convolutional Neural Networks (CNNs), MNIST, 2D convolution, padding, stride, channels, image filter, max pooling, ILSVRC, ImageNet, AlexNet, VGGNet, ResNet.
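The relationship between padding, stride, and output size can be checked with a small single-channel NumPy sketch. With input size H, kernel size K, padding P, and stride S, each output dimension is floor((H + 2P - K) / S) + 1. As in CNN libraries, the "convolution" here is a cross-correlation, meaning the kernel is not flipped. The function names are hypothetical, and multiple channels are omitted for brevity.

```python
import numpy as np

def conv2d(x, k, padding=0, stride=1):
    """Single-channel 2D convolution (cross-correlation) with zero padding and stride."""
    x = np.pad(x, padding)  # zero-pad all four sides
    kh, kw = k.shape
    oh = (x.shape[0] - kh) // stride + 1
    ow = (x.shape[1] - kw) // stride + 1
    out = np.zeros((oh, ow))
    for i in range(oh):
        for j in range(ow):
            patch = x[i * stride:i * stride + kh, j * stride:j * stride + kw]
            out[i, j] = np.sum(patch * k)
    return out

def max_pool2d(x, size=2, stride=2):
    """Take the maximum over each size x size window."""
    oh = (x.shape[0] - size) // stride + 1
    ow = (x.shape[1] - size) // stride + 1
    out = np.zeros((oh, ow))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = x[i * stride:i * stride + size, j * stride:j * stride + size].max()
    return out

x = np.arange(25, dtype=float).reshape(5, 5)
k = np.ones((3, 3))
same = conv2d(x, k, padding=1)               # (5 + 2 - 3) // 1 + 1 = 5, so shape (5, 5)
strided = conv2d(x, k, padding=1, stride=2)  # (5 + 2 - 3) // 2 + 1 = 3, so shape (3, 3)
pooled = max_pool2d(x)                       # (5 - 2) // 2 + 1 = 2, so shape (2, 2)
print(same.shape, strided.shape, pooled)
```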

Slides

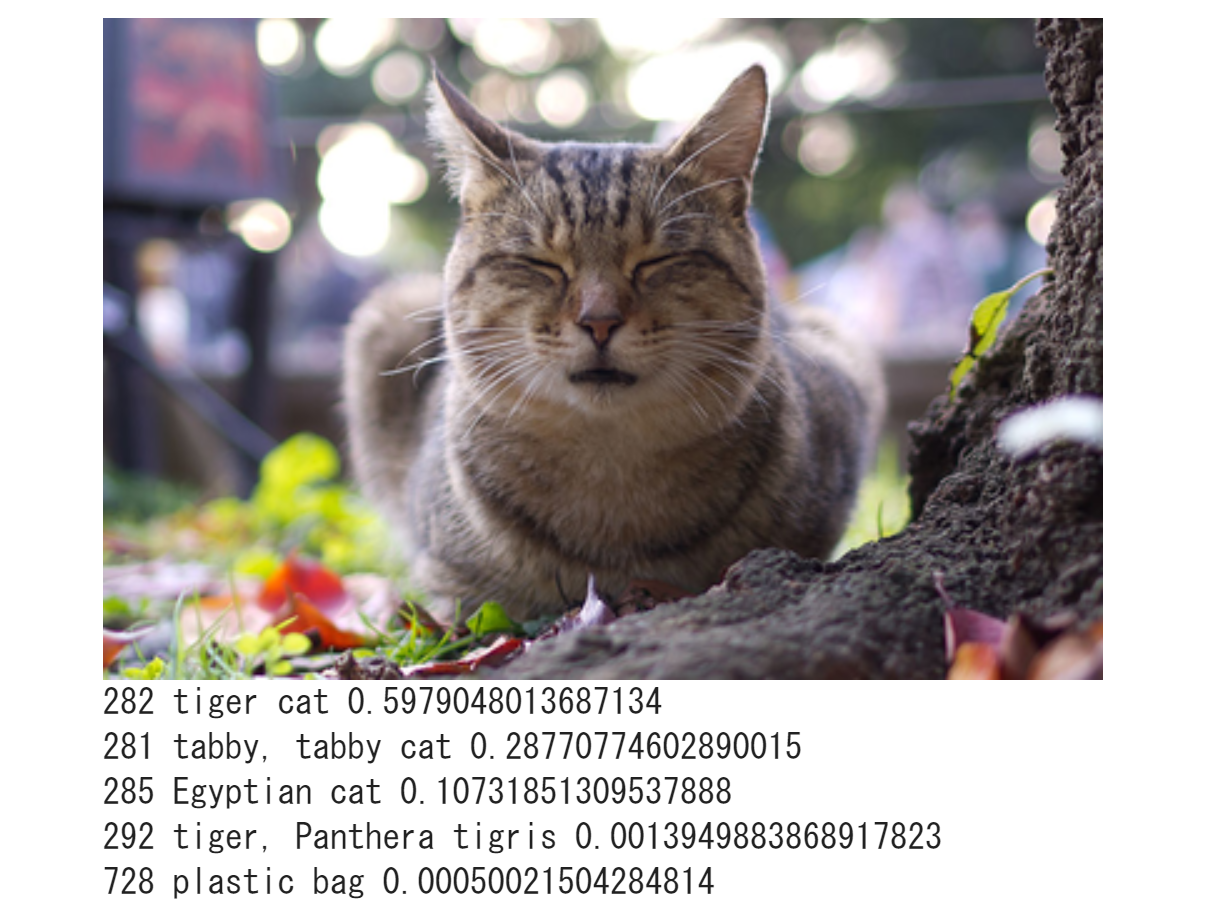

Object recognition

Classify an image using ResNet-50.

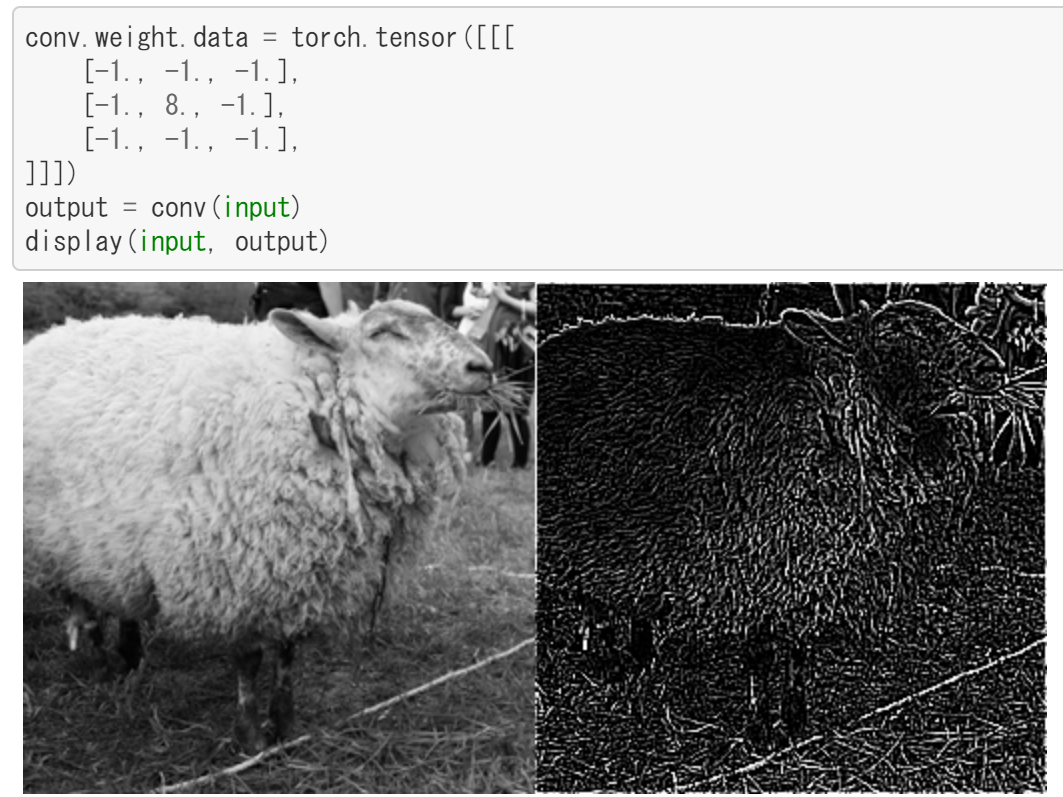

Image filters

Applying various image filters by manually setting the weight values of torch.nn.Conv2d.
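The same idea, a convolution whose kernel is fixed by hand instead of learned, can be illustrated without PyTorch. The NumPy sketch below uses `numpy.lib.stride_tricks.sliding_window_view`, which requires NumPy 1.20 or later. The box-blur and Sobel kernels are illustrative choices and may differ from the filters in the notebook. The synthetic image is dark on the left and bright on the right, so the Sobel filter responds only near the vertical boundary.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def apply_filter(image, kernel):
    """Valid (no padding, stride 1) cross-correlation with a hand-set kernel,
    the same operation a single-channel Conv2d computes with fixed weights."""
    windows = sliding_window_view(image, kernel.shape)  # (H-kh+1, W-kw+1, kh, kw)
    return np.einsum("ijkl,kl->ij", windows, kernel)

box_blur = np.full((3, 3), 1.0 / 9.0)   # averages each 3x3 neighborhood
sobel_x = np.array([[-1.0, 0.0, 1.0],
                    [-2.0, 0.0, 2.0],
                    [-1.0, 0.0, 1.0]])  # responds to left-to-right intensity changes

image = np.zeros((6, 6))
image[:, 3:] = 1.0  # dark left half, bright right half
edges = apply_filter(image, sobel_x)
blurred = apply_filter(image, box_blur)
print(edges)
print(blurred)
```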

Lecture #4: Word embeddings

Keywords: word embeddings, distributed representation, distributional hypothesis, pointwise mutual information, singular value decomposition, word2vec, word analogy, GloVe, fastText.
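The count-based route to word vectors (co-occurrence counts, then positive PMI, then truncated SVD) can be sketched in NumPy on a toy matrix. The words, contexts, and counts below are hypothetical and were made up for illustration. In practice, the matrix would be built from a large corpus with a context window. PPMI is max(0, log P(w, c) / (P(w) P(c))), and keeping the top k singular directions gives dense k-dimensional word vectors.

```python
import numpy as np

def ppmi(C):
    """Positive pointwise mutual information from a word-by-context count matrix."""
    total = C.sum()
    p_wc = C / total
    p_w = C.sum(axis=1, keepdims=True) / total
    p_c = C.sum(axis=0, keepdims=True) / total
    with np.errstate(divide="ignore"):  # log(0) = -inf for unseen pairs; clipped to 0 below
        pmi = np.log(p_wc / (p_w * p_c))
    return np.maximum(pmi, 0.0)

def cosine(u, v):
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))

# Hypothetical counts: rows are words, columns are context words
words = ["cat", "dog", "car", "truck"]
contexts = ["pet", "fur", "drive", "wheel"]
C = np.array([[5, 4, 0, 1],
              [6, 5, 1, 0],
              [0, 0, 6, 5],
              [1, 0, 5, 6]], dtype=float)
M = ppmi(C)
U, S, Vt = np.linalg.svd(M)
k = 2
emb = U[:, :k] * S[:k]  # k-dimensional word vectors from truncated SVD
print(cosine(emb[0], emb[1]), cosine(emb[0], emb[2]))  # cat-dog vs. cat-car
```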

Slides

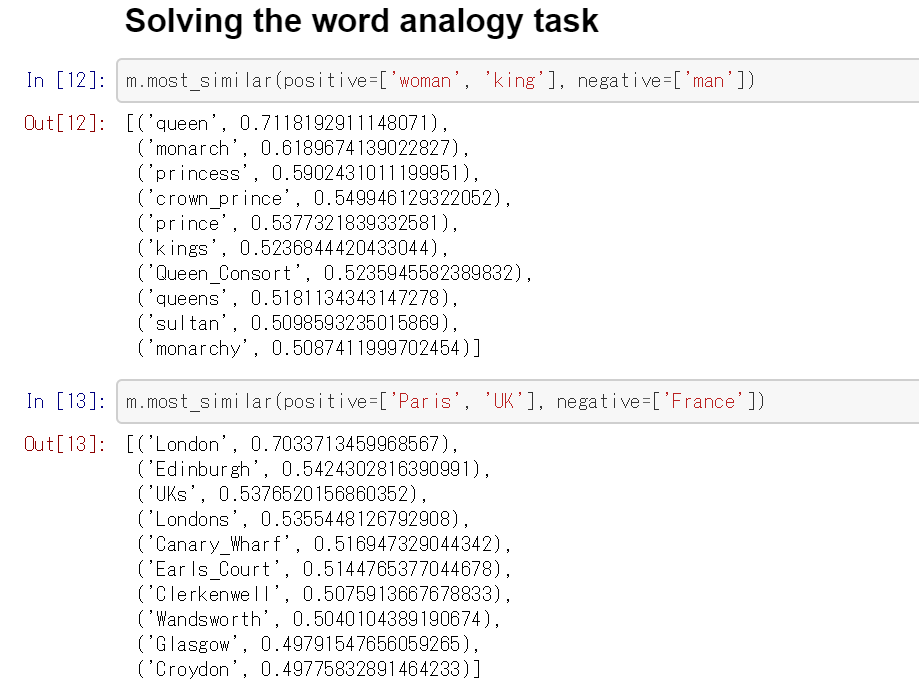

English word vectors

Loading word vectors pre-trained on English news; computing similarity; solving word analogies.
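Word analogy ("man is to king as woman is to ?") is usually solved by finding the word whose vector is most cosine-similar to king - man + woman, excluding the query words. This is sometimes called the 3CosAdd method. The page does not say which library the notebooks use. For reference, gensim exposes this through `KeyedVectors.most_similar(positive=[...], negative=[...])`. The pure NumPy sketch below shows only the arithmetic. Its three-dimensional vectors are hypothetical and hand-made so that the analogy holds exactly; real embeddings have hundreds of dimensions and only approximate this.

```python
import numpy as np

def analogy(vectors, a, b, c, topn=1):
    """Answer 'a is to b as c is to ?' by cosine similarity to b - a + c (query words excluded)."""
    target = vectors[b] - vectors[a] + vectors[c]
    target = target / np.linalg.norm(target)
    scores = {}
    for word, vec in vectors.items():
        if word in (a, b, c):
            continue
        scores[word] = float(vec @ target / np.linalg.norm(vec))
    return sorted(scores, key=scores.get, reverse=True)[:topn]

# Hypothetical 3-d vectors; dimensions loosely mean (royalty, gender, fruit)
vectors = {
    "king":  np.array([0.9, 0.8, 0.0]),
    "queen": np.array([0.9, -0.8, 0.0]),
    "man":   np.array([0.1, 0.8, 0.0]),
    "woman": np.array([0.1, -0.8, 0.0]),
    "apple": np.array([0.0, 0.0, 1.0]),
}
print(analogy(vectors, "man", "king", "woman"))
```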

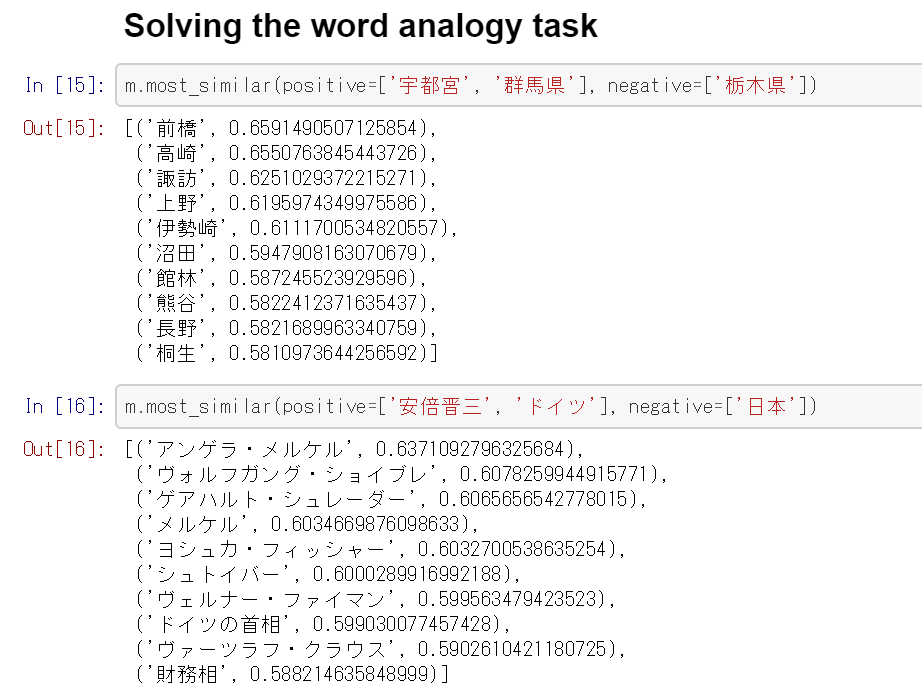

Japanese word vectors

Loading word vectors trained on Japanese Wikipedia; computing similarity; solving word analogies.

Lecture #5: DNNs for structured data

Keywords: Recurrent Neural Networks (RNNs), vanishing and exploding gradients, Long Short-Term Memory (LSTM), Gated Recurrent Units (GRUs), Recursive Neural Networks, Tree-structured LSTM, Convolutional Neural Networks (CNNs).
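A single LSTM time step can be written in a few lines of NumPy. This sketch assumes the four gate pre-activations are stacked in the order input, forget, output, candidate. That ordering is only a convention, and libraries differ. Biases are zero-initialized for simplicity. The additive update of the cell state c is what lets gradients pass through many time steps, scaled elementwise by the forget gate, instead of being multiplied repeatedly by the recurrent weight matrix as in a plain RNN.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM step. x: (d_in,); h_prev, c_prev: (d_h,);
    W: (4*d_h, d_in); U: (4*d_h, d_h); b: (4*d_h,)."""
    d_h = h_prev.shape[0]
    z = W @ x + U @ h_prev + b
    i = sigmoid(z[:d_h])             # input gate
    f = sigmoid(z[d_h:2 * d_h])      # forget gate
    o = sigmoid(z[2 * d_h:3 * d_h])  # output gate
    g = np.tanh(z[3 * d_h:])         # candidate cell state
    c = f * c_prev + i * g           # additive cell-state update
    h = o * np.tanh(c)               # hidden state exposed to the next layer
    return h, c

rng = np.random.default_rng(0)
d_in, d_h, T = 3, 4, 5
W = rng.normal(scale=0.1, size=(4 * d_h, d_in))
U = rng.normal(scale=0.1, size=(4 * d_h, d_h))
b = np.zeros(4 * d_h)
h, c = np.zeros(d_h), np.zeros(d_h)
for x in rng.normal(size=(T, d_in)):  # run over a random length-5 input sequence
    h, c = lstm_step(x, h, c, W, U, b)
print(h, c)
```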

Slides

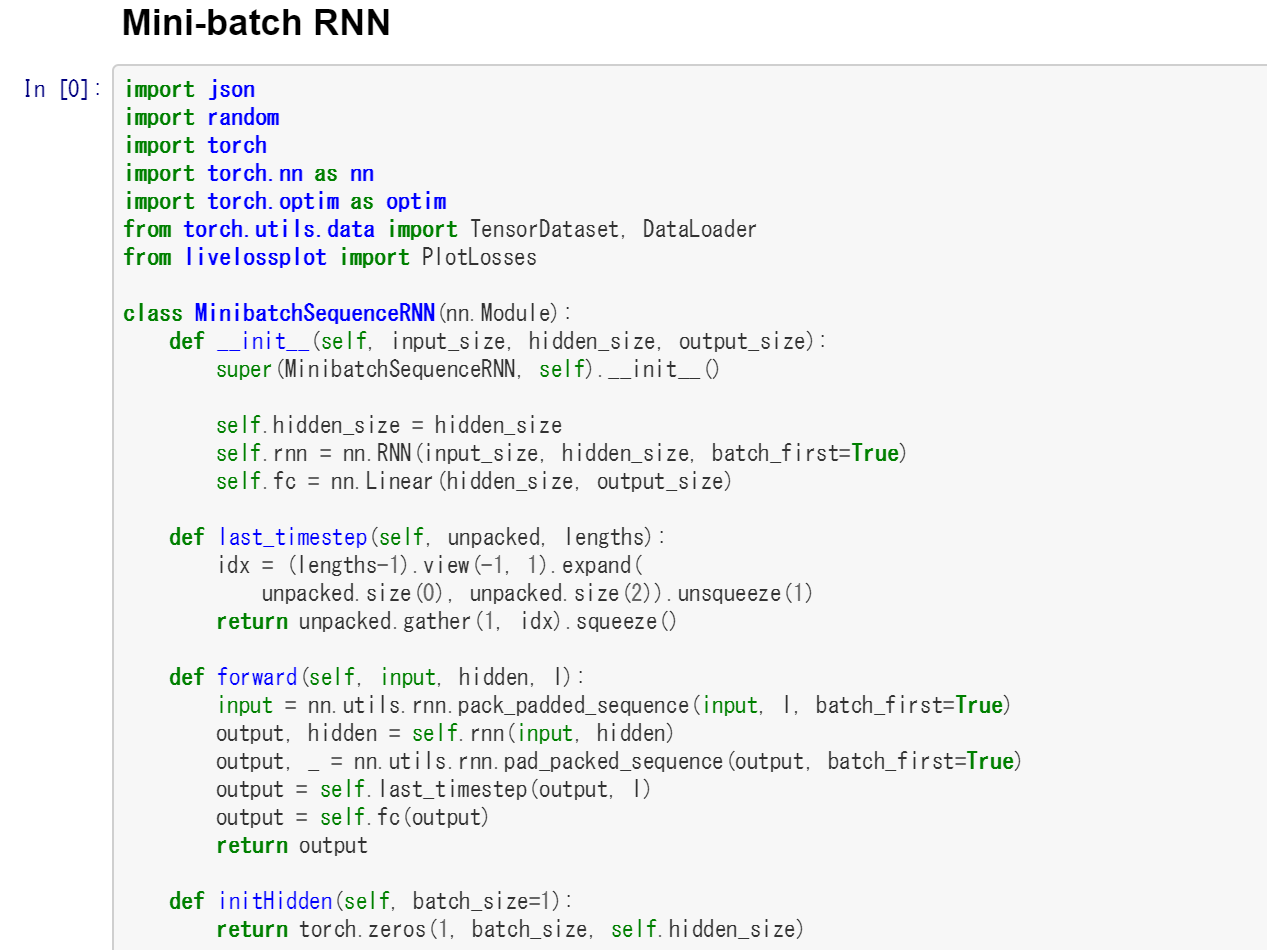

Implementations

RNN; mini-batch RNN.
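Mini-batch RNN training has to handle sequences of different lengths. A common approach is to zero-pad them to the same length and mask out the padded steps. The NumPy sketch below assumes a plain Elman RNN with a tanh activation. Sequences that have already ended simply keep their previous hidden state. The function and variable names are hypothetical. The padded batch gives the same final states as running each sequence on its own.

```python
import numpy as np

def rnn_minibatch(X, lengths, W_xh, W_hh, b_h):
    """Elman RNN over a zero-padded batch X of shape (batch, T, d_in).
    Returns each sequence's hidden state at its last real time step, shape (batch, d_h)."""
    batch, T, _ = X.shape
    h = np.zeros((batch, W_hh.shape[0]))
    for t in range(T):
        h_new = np.tanh(X[:, t] @ W_xh.T + h @ W_hh.T + b_h)
        active = (t < lengths)[:, None]  # True for sequences that still have input at step t
        h = np.where(active, h_new, h)   # finished sequences keep their last state
    return h

rng = np.random.default_rng(0)
d_in, d_h = 3, 4
W_xh = rng.normal(scale=0.5, size=(d_h, d_in))
W_hh = rng.normal(scale=0.5, size=(d_h, d_h))
b_h = np.zeros(d_h)
seqs = [rng.normal(size=(n, d_in)) for n in (5, 2, 3)]  # variable-length sequences
lengths = np.array([len(s) for s in seqs])
X = np.zeros((len(seqs), lengths.max(), d_in))
for k, s in enumerate(seqs):
    X[k, :len(s)] = s  # zero-pad to the longest sequence
h_last = rnn_minibatch(X, lengths, W_xh, W_hh, b_h)
print(h_last.shape)
```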

Lecture #6: Encoder-decoder models

Keywords: language modeling, Recurrent Neural Network Language Model (RNNLM), encoder-decoder models, sequence-to-sequence models, attention mechanism, Convolutional Sequence to Sequence (ConvS2S), Transformer, GPT, BERT.
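The attention mechanism at the core of the Transformer is scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V. The NumPy sketch below also applies a causal mask so that each position attends only to itself and earlier positions, as in a decoder or GPT-style model. As a simplification, it feeds the same input as queries, keys, and values and omits the learned projection matrices and multiple heads.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(Q, K, V, mask=None):
    """Q: (n_q, d_k), K: (n_k, d_k), V: (n_k, d_v); mask: boolean (n_q, n_k), True = may attend."""
    scores = Q @ K.T / np.sqrt(K.shape[-1])
    if mask is not None:
        scores = np.where(mask, scores, -np.inf)  # masked positions get zero weight after softmax
    weights = softmax(scores, axis=-1)
    return weights @ V, weights

rng = np.random.default_rng(0)
n, d = 4, 8
X = rng.normal(size=(n, d))
causal = np.tril(np.ones((n, n), dtype=bool))  # position i may attend to positions 0..i
out, weights = scaled_dot_product_attention(X, X, X, mask=causal)
print(np.round(weights, 3))
```

Without the mask, every position attends to the whole sequence. This is the setting used in an encoder or BERT-style model.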